Boosting Core Banking Efficiency with NVIDIA’s Agentic AI-Powered Virtual Assistants

Core banking platforms such as Temenos Transact, FIS® Systematics, Fiserv DNA, Thought Machine, and Oracle FLEXCUBE are the digital backbone of modern banking. They power mission-critical business and IT operations, but their complex implementation cycles and day-to-day operations depend on specialist subject matter experts (SMEs) to run efficiently.

This blog explores how banks can apply generative AI and agentic AI through NVIDIA AI Enterprise to streamline operations, reduce manual overhead, and enhance productivity across core banking systems.

The opportunity

Different banking users, such as branch users, customer operations, business analysts, engineers, and IT operations, need extensive training and access to manuals and SMEs to utilize core banking platforms efficiently.

| Persona | Opportunity |
| --- | --- |
| Branch banking users | Can reduce reliance on lengthy manuals for basic actions, enabling faster service and minimizing errors. |
| IT ops users | Can gain greater platform visibility to resolve high-severity incidents faster and more independently. |
| Engineering teams | Can leverage internal knowledge and targeted SME inputs to identify and address system gaps more efficiently, accelerating agile change implementation. |

McKinsey¹ notes that banks often struggle with digital transformations because of siloed, complex IT architecture. Legacy systems burdened with technical debt and customizations slow down modernization, delaying issue resolution and platform enhancements.

Solution details

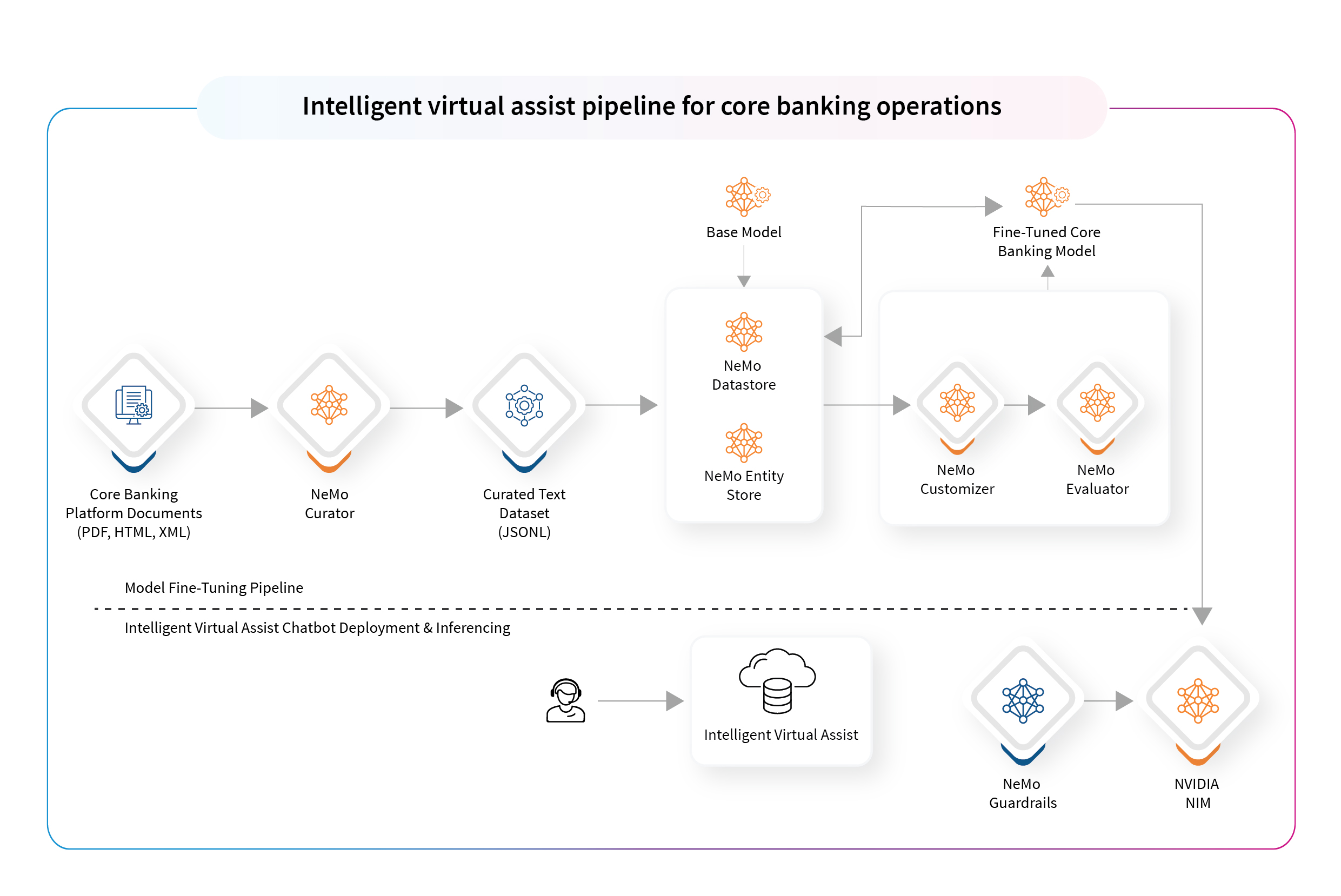

Built with NVIDIA NeMo, NIM, and NeMo Guardrails, the intelligent virtual assist is based on agentic AI and underpinned by a large language model (LLM) fine-tuned on core banking and platform-specific data.

NeMo™ powers the training pipeline, NIM™ handles optimized deployment and inference, and NeMo Guardrails enforces responsible AI usage through predefined rules for inputs, outputs, and dialog behavior.

Why fine-tuning?

After evaluating multiple solution patterns, including a retrieval-augmented generation (RAG) architecture, fine-tuning, and pre-training a small language model (SLM), LTIMindtree, with guidance from NVIDIA, identified fine-tuning as the most effective way to deliver the SME-like system knowledge the assistant requires.

Solution architecture visual

Figure 1: Intelligent virtual assist pipeline for core banking operations

Key components

- Model fine-tuning pipeline using the NeMo framework:

To create a context-aware virtual assistant tailored to the core banking environment, the team adopted a modular fine-tuning approach using NVIDIA’s NeMo framework. This approach ensured domain specificity, reduced resource costs, and shortened training time.

- Data curation: Core banking platform documents were carefully selected and structured using NeMo Curator to build a domain-specific dataset for SME-like scenarios.

- Optimization techniques: The team refined the model using parameter-efficient fine-tuning (PEFT), specifically low-rank adaptation (LoRA). Because LoRA trains only small adapter matrices while the base model weights stay frozen, it significantly reduced compute cost and memory usage, allowing faster model customization without compromising accuracy.

- Model customization: The Llama 3.1 base model was fine-tuned using NeMo Customizer to embed contextual knowledge of banking workflows, SME decisions, and IT operations.

- Model evaluation: After training, the model was evaluated with NeMo Evaluator, using ROUGE and perplexity scores to track comprehension, fluency, and generation quality.
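To make the LoRA idea concrete, here is a minimal pure-Python sketch of a LoRA-adapted linear layer. It assumes the standard LoRA formulation, in which the pretrained weight W stays frozen and only two small matrices A (r × k) and B (d × r) are trained, so the effective weight becomes W + (alpha / r)·B·A. The helper names, the rank r = 8, and the 4096 × 4096 projection size are illustrative assumptions. The projection size matches the hidden size of Llama 3.1 8B, but the source does not say which Llama 3.1 variant was used. None of this reflects NeMo internals.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def lora_forward(w: Matrix, a: Matrix, b: Matrix, x: Vector,
                 alpha: float, r: int) -> Vector:
    """y = W x + (alpha / r) * B (A x); W is frozen, only A and B are trained."""
    base = matvec(w, x)                # frozen pretrained path
    delta = matvec(b, matvec(a, x))    # low-rank path: A x has r values, B maps them to d outputs
    scale = alpha / r
    return [y + scale * dy for y, dy in zip(base, delta)]


def trainable_params(d: int, k: int, r: int) -> Tuple[int, int]:
    """Return (full fine-tuning params, LoRA params) for one d x k weight matrix."""
    return d * k, r * (d + k)


if __name__ == "__main__":
    # Tiny example: d = 2 outputs, k = 3 inputs, rank r = 1.
    w = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]
    a = [[0.5, 0.5, 0.5]]   # r x k, randomly initialized in practice
    b = [[0.0], [0.0]]      # d x r, zero-initialized so training starts from W
    x = [1.0, 2.0, 3.0]
    print(lora_forward(w, a, b, x, alpha=16.0, r=1))  # [7.0, -1.0], same as W x

    full, lora = trainable_params(4096, 4096, 8)
    print(full, lora, f"{100 * lora / full:.2f}%")    # 16777216 65536 0.39%
```

At initialization the adapter contributes nothing because B is zero. For a single 4096 × 4096 projection at r = 8, the trainable parameters drop from 16,777,216 to 65,536 (about 0.39%), which is where the compute and memory savings of LoRA come from.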
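The two evaluation metrics named above can be illustrated with a short worked example. The sketch computes two quantities:

- ROUGE-1 F1: unigram overlap between a generated answer and a reference answer.
- Perplexity: the exponential of the mean negative log-likelihood per token.

It uses naive whitespace tokenization, a hypothetical reference answer, and made-up per-token probabilities. It is an assumption-laden illustration of the formulas, not NeMo Evaluator's implementation.

```python
import math
from collections import Counter
from typing import List


def rouge1_f1(candidate: str, reference: str) -> float:
    """ROUGE-1 F1 with naive lowercase whitespace tokenization."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())   # clipped unigram matches
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def perplexity(token_probs: List[float]) -> float:
    """exp of the mean negative log-probability assigned to each target token."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


if __name__ == "__main__":
    # Hypothetical SME reference answer and model output.
    ref = "restart the close of business batch from the COB monitor"
    gen = "restart the COB batch from the monitor"
    print(round(rouge1_f1(gen, ref), 3))          # 0.824 (precision 1.0, recall 0.7)
    print(round(perplexity([0.25] * 4), 6))       # 4.0
```

A higher ROUGE score means more overlap with SME-written reference answers. A lower perplexity means the model assigns higher probability to the reference text. A perplexity of 4 corresponds to the model being, on average, as uncertain as a uniform choice among four tokens.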

- Inference using NIM and NeMo Guardrails:

To deploy the virtual assistant securely for real-time use, the team used NVIDIA NIM and NeMo Guardrails, which ensured safe model behavior, controlled outputs, and enabled high-performance inference across environments.

- NVIDIA NIM containers: Deployed to run optimized, GPU-accelerated inference workloads with smooth, scalable performance.

- Custom guardrails: Guardrails were defined as Colang flows to control input, output, and dialog behavior, ensuring the virtual assistant responded accurately, stayed within safety boundaries, and remained aligned with banking task objectives.
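NIM LLM containers expose an OpenAI-compatible HTTP API, so client code can stay simple. The sketch below only builds a chat-completions request with the standard library and does not send it. Several values are placeholders and assumptions:

- The host and port (`localhost:8000`).
- The adapter name `core-banking-lora`, which assumes the fine-tuned LoRA adapter is served under that model name.
- The system prompt.

Check the NIM documentation for your deployment before relying on any of them.

```python
import json
import urllib.request

# Assumed values for illustration; replace with your deployment's details.
NIM_URL = "http://localhost:8000/v1/chat/completions"
MODEL = "core-banking-lora"  # hypothetical LoRA adapter name served by NIM


def build_chat_request(question: str, persona: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL,
        "messages": [
            {"role": "system",
             "content": f"You are a core banking assistant for {persona} users."},
            {"role": "user", "content": question},
        ],
        "temperature": 0.2,
        "max_tokens": 512,
    }
    return urllib.request.Request(
        NIM_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("How do I reverse a posted transaction?", "branch")
    print(req.get_method(), req.full_url)
    # To send it: urllib.request.urlopen(req), then read
    # choices[0].message.content from the JSON response body.
```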
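The rails concept itself can be illustrated without the library. The following is a toy, pure-Python stand-in for an input rail and an output rail built from simple keyword and regex checks. It is not the NeMo Guardrails API or Colang, which define such checks declaratively as flows. The blocked topics and the account-number pattern are hypothetical examples.

```python
import re
from typing import Callable

# Hypothetical policy for illustration only.
OFF_TOPIC = ("stock tips", "crypto", "lottery")
ACCOUNT_NUMBER = re.compile(r"\b\d{10,16}\b")   # crude account/card number pattern
REFUSAL = "I can only help with core banking tasks."


def input_rail(user_msg: str) -> bool:
    """Return True if the request may be passed to the model."""
    text = user_msg.lower()
    return not any(topic in text for topic in OFF_TOPIC)


def output_rail(bot_msg: str) -> str:
    """Mask long digit runs so account numbers are not echoed back."""
    return ACCOUNT_NUMBER.sub("[REDACTED]", bot_msg)


def guarded_reply(user_msg: str, llm: Callable[[str], str]) -> str:
    """Apply the input rail, call the model, then apply the output rail."""
    if not input_rail(user_msg):
        return REFUSAL
    return output_rail(llm(user_msg))


if __name__ == "__main__":
    def fake_llm(msg: str) -> str:   # stand-in for a real model call
        return "Account 1234567890 is blocked; unblock it from the account screen."

    print(guarded_reply("Why is this account blocked?", fake_llm))
    print(guarded_reply("Any crypto stock tips?", fake_llm))
```

The design point carried over from the real framework is the ordering. The input rail can stop a request before it reaches the model, and the output rail can still rewrite whatever the model returns.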

Why NVIDIA NeMo microservices?

- Model flexibility: Easily customize models using HTTP API endpoints.

- Deployment options: Supports both cloud and on-premises environments.

- Robust guardrails: Enforce responsible AI usage through configurable rules for inputs, outputs, and dialog.

- Performance gains: Fine-tuning time reduced by 20-25%, with no compromise on accuracy.

- Performance & benchmarks: The NeMo-powered intelligent assist demonstrated:

- Lower inference latency: Faster model execution due to optimized deployment.

- Higher throughput & accuracy: Efficient optimization & inference workflows delivered faster response times and better results.
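As an illustration of customizing models through HTTP endpoints, the sketch below builds, without sending, a LoRA fine-tuning job request for NeMo Customizer. Every concrete value in it is an assumption loosely modeled on public NeMo microservices examples:

- The endpoint path `/v1/customization/jobs` and the host.
- The field names and hyperparameter values.
- The base-model config string and the dataset name.

Verify every field against the version you deploy.

```python
import json
import urllib.request

# All values below are assumptions for illustration.
CUSTOMIZER_URL = "http://nemo-customizer.example.internal/v1/customization/jobs"


def build_lora_job(dataset_name: str, namespace: str, epochs: int = 3,
                   adapter_dim: int = 16) -> urllib.request.Request:
    """Build (but do not send) a LoRA customization job request."""
    payload = {
        "config": "meta/llama-3.1-8b-instruct",      # assumed base-model config id
        "dataset": {"name": dataset_name, "namespace": namespace},
        "hyperparameters": {
            "training_type": "sft",                   # supervised fine-tuning
            "finetuning_type": "lora",                # PEFT via LoRA
            "epochs": epochs,
            "batch_size": 16,
            "learning_rate": 1e-4,
            "lora": {"adapter_dim": adapter_dim},     # LoRA rank r
        },
    }
    return urllib.request.Request(
        CUSTOMIZER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_lora_job("core-banking-sme-qa", "default")   # hypothetical dataset
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Because the job is plain JSON over HTTP, the same request can be issued from CI pipelines, scripts, or notebooks. This is the kind of flexibility described in the list above.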
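Latency and throughput claims like those above are typically checked with a small load harness. This sketch times sequential calls to any request function and reports median and 95th-percentile latency plus requests per second. The function under test is a hypothetical stand-in. A real benchmark would also drive concurrent requests and measure tokens per second.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(call: Callable[[], object], n: int = 50) -> Dict[str, float]:
    """Run `call` n times sequentially and summarize latency and throughput."""
    if n < 2:
        raise ValueError("need at least two samples to compute percentiles")
    latencies = []
    start = time.perf_counter()
    for _ in range(n):
        t0 = time.perf_counter()
        call()
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    # 20 quantiles -> 19 cut points (5%, 10%, ..., 95%); index 18 is the 95th percentile.
    p95 = statistics.quantiles(latencies, n=20, method="inclusive")[18]
    return {
        "p50_ms": statistics.median(latencies) * 1000,
        "p95_ms": p95 * 1000,
        "req_per_s": n / elapsed,
    }


if __name__ == "__main__":
    def fake_inference() -> str:   # hypothetical stand-in for a NIM request
        time.sleep(0.01)
        return "ok"

    print(benchmark(fake_inference, n=20))
```

The `inclusive` method keeps the percentile within the observed range. This is a reasonable choice for small sample counts.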

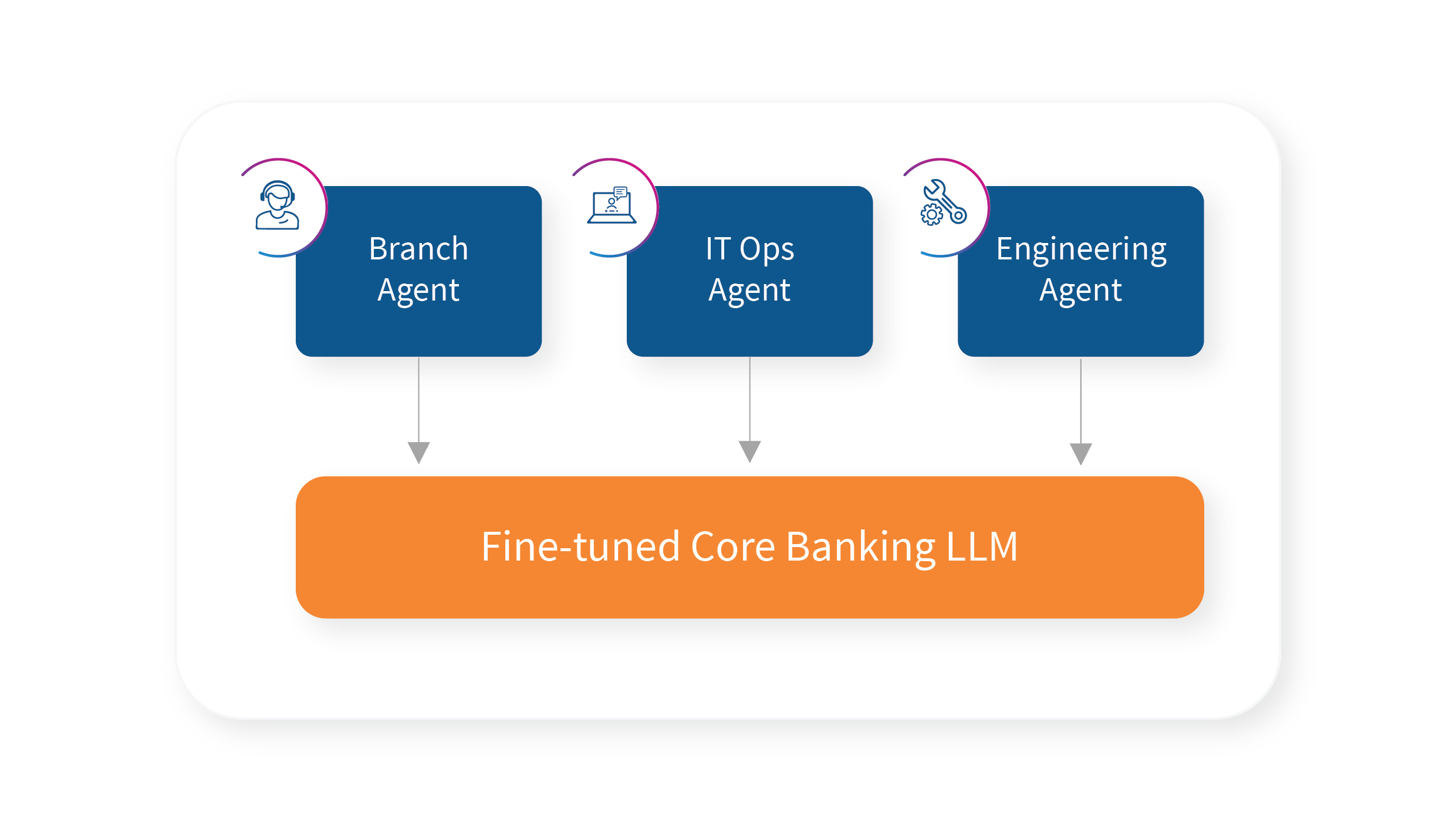

Using agentic AI to unlock full solution potential

This optimized LLM foundation can power multiple persona-specific agents to automate core banking tasks such as loan restructuring, failure prediction, system monitoring, and even code generation. Each agent contextually uses relevant core banking data, ensuring purpose-built outputs instead of generic product-level responses.

In this setup, the same fine-tuned LLM supports a branch agent, an IT ops agent, and an engineering agent, all drawing on the same core model but responding to different operational needs.
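One way to picture several persona-specific agents sharing one fine-tuned model is a thin routing layer. It picks a system prompt and an allowed tool set for each persona before calling the same model. This is a hypothetical sketch. The persona names come from the text above, while the prompts, tool names, and the `llm` callable are illustrative assumptions rather than part of the described solution.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass(frozen=True)
class AgentProfile:
    system_prompt: str
    tools: Tuple[str, ...]   # hypothetical tool names the agent may call


# Hypothetical profiles; all agents share the same fine-tuned model.
PROFILES: Dict[str, AgentProfile] = {
    "branch": AgentProfile(
        "Guide branch staff step by step through core banking screens.",
        ("lookup_customer", "explain_screen")),
    "it_ops": AgentProfile(
        "Help IT operations diagnose batch and incident issues.",
        ("read_job_logs", "check_service_status")),
    "engineering": AgentProfile(
        "Help engineers understand local customizations and propose changes.",
        ("search_codebase", "draft_change")),
}


def run_agent(persona: str, question: str,
              llm: Callable[[str, str], str]) -> str:
    """Route a question to the persona's profile and call the shared model."""
    profile = PROFILES.get(persona)
    if profile is None:
        raise ValueError(f"unknown persona: {persona!r}")
    system = f"{profile.system_prompt}\nAvailable tools: {', '.join(profile.tools)}"
    return llm(system, question)


if __name__ == "__main__":
    def echo_llm(system: str, user: str) -> str:   # stand-in for a NIM call
        return f"[{system.splitlines()[0]}] {user}"

    print(run_agent("it_ops", "Why did the overnight batch stop?", echo_llm))
```

Keeping personas as data rather than separate models matches the idea of many agents drawing on one fine-tuned core.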

To adopt this solution, technical or business users of Temenos Transact or similar core banking platforms can further fine-tune the LLM on their own data and use cases, so that its contextual knowledge reflects their specific system landscape.
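For teams that further fine-tune on their own data, the first practical step is usually turning internal knowledge (runbooks, resolved tickets, SME answers) into training records. This sketch writes prompt/completion pairs as JSON Lines and drops blank and duplicate entries. The `prompt`/`completion` field names are a common convention for supervised fine-tuning datasets and are an assumption here, as are the sample records.

```python
import json
from typing import Iterable, List, Tuple


def to_jsonl(pairs: Iterable[Tuple[str, str]]) -> str:
    """Convert (question, answer) pairs to JSONL, skipping blanks and duplicates."""
    seen = set()
    lines: List[str] = []
    for question, answer in pairs:
        q, a = question.strip(), answer.strip()
        if not q or not a:
            continue                        # skip incomplete records
        key = " ".join(q.lower().split())   # normalize case and whitespace for dedup
        if key in seen:
            continue                        # keep the first answer for a repeated question
        seen.add(key)
        lines.append(json.dumps({"prompt": q, "completion": a}))
    return "\n".join(lines) + ("\n" if lines else "")


if __name__ == "__main__":
    sample = [   # hypothetical records
        ("How do I amend a standing order?",
         "Open the standing order record, edit the amount or date, and authorize the change."),
        ("how do I amend a  standing order?", "Duplicate phrasing, dropped."),
        ("", "Orphan answer, dropped."),
    ]
    print(to_jsonl(sample), end="")
```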

Results and potential business impact

Implementing the intelligent virtual assist using NVIDIA’s AI stack delivers:

- Productivity gains: Improved task accuracy and reduced SME dependence.

- Enhanced user experience: Faster and more relevant output for daily operations.

- Time savings: Up to 25% time reduction through optimized processes and faster resolutions.

Why this solution matters

In today’s competitive and regulated banking environment, operational efficiency and customer satisfaction are non-negotiable. The intelligent virtual assist solution addresses the need for faster, more reliable operations by minimizing dependence on SMEs and extensive manual training.

By enabling banking staff to perform complex tasks easily and accurately, the solution drives faster issue resolution, improves service quality, and boosts workforce productivity. Enhanced user experience leads to stronger customer loyalty, while operational improvements translate to meaningful cost savings and long-term business advantage.

To explore how NVIDIA AI Enterprise and agentic AI can support your digital transformation in banking, contact us or visit our website to learn more about developing custom LLM solutions tailored to your core banking needs.

Sources

1 McKinsey, Why most digital banking transformations fail—and how to flip the odds, Akhil Babbar, April 11, 2023: https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/tech-forward/why-most-digital-banking-transformations-fail-and-how-to-flip-the-odds

More from Tarun Kapoor
