Eureka

LTIMindtree’s Eureka accelerates the migration of legacy data ecosystems and cloud data warehouses (DW) to Google Cloud Platform (GCP) BigQuery. It addresses the core challenges of modernizing legacy data platforms for the GCP Data Cloud. The result is a transformation journey that is timely, predictable, and cost-effective, and one that de-risks and eases every step of the transition.

Eureka is a proven solution that has helped clients modernize their data platforms to BigQuery.

For instance, for an Irish-American automotive-technology supplier, it migrated over 20,000 database objects and ETLs to BigQuery. The transformation delivered approximately 30% faster time to insights, reduced operational costs, and enabled self-service analytics, all within just 13 months.

Our Framework

Analyze

Migration planning is critical to a successful data-warehouse modernization. Eureka Analyze offers intelligent insights into the source data platform, helping you formulate a robust migration plan for BigQuery while flagging technical debt and improvement opportunities.

- Object analysis: Provides a complete inventory of the source data objects, including counts, sizes, complexity, table access patterns, candidate columns for partitioning and clustering, and other insights.

- Compute analysis: Supplies detailed views of compute configurations, query mix, workloads, and query and user access patterns.

- Debt analysis: Provides technical-debt details that help descope objects that are not relevant to the migration.

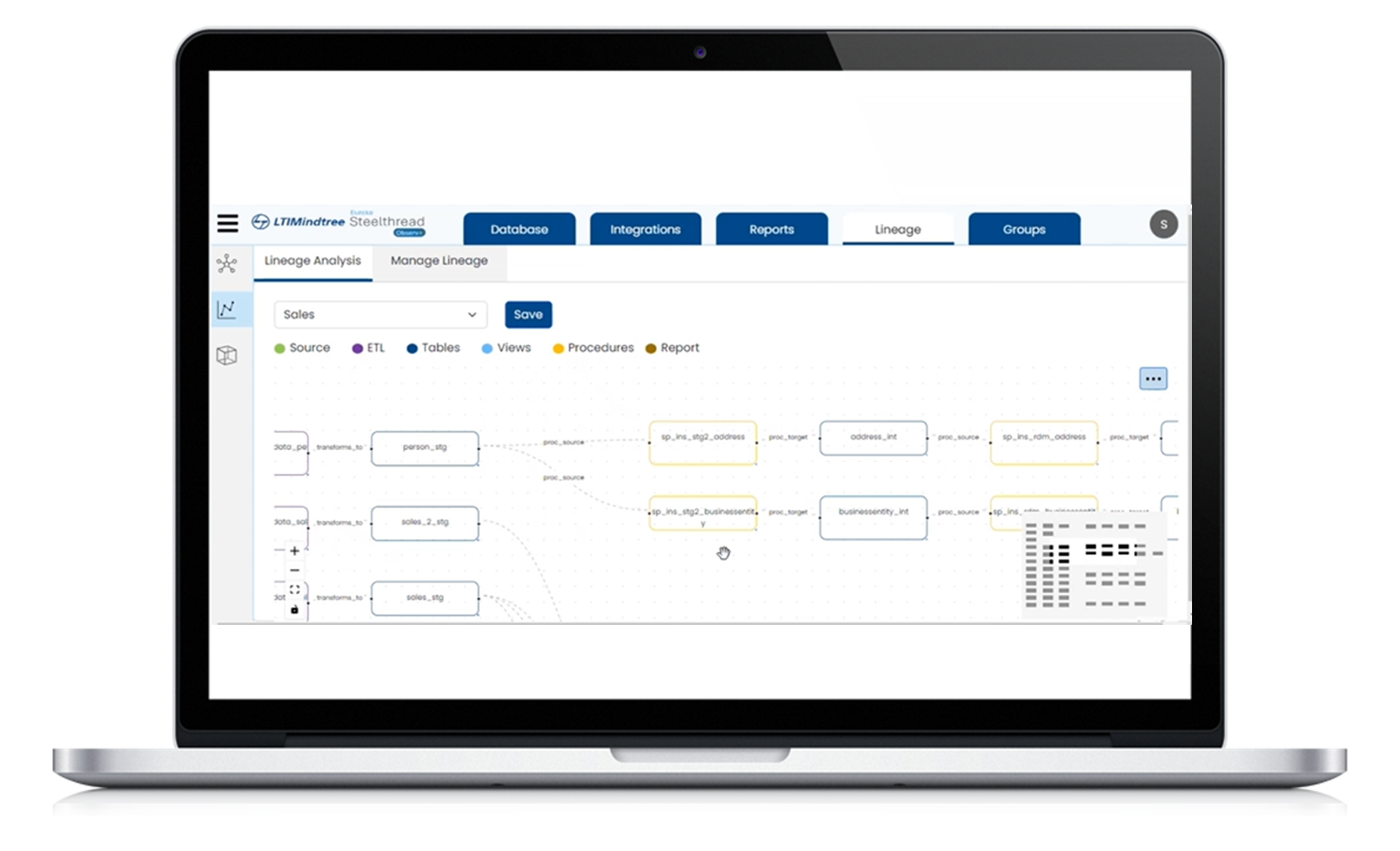

- End-to-end cross-platform data lineage: Generates cross-platform lineage across databases, ETL tools, and reports, revealing object dependencies and deployment groups. It also leverages Gen AI for a comprehensive understanding of the source platform.
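To illustrate how object dependencies can translate into deployment groups, here is a minimal Python sketch. It is not Eureka's implementation: the function name `deployment_groups` and the object names in `lineage` are hypothetical, and the grouping uses a standard topological sort from Python's `graphlib` module (Python 3.9 or later).

```python
from graphlib import TopologicalSorter


def deployment_groups(dependencies):
    """Group objects into waves; each wave depends only on earlier waves.

    `dependencies` maps an object name to the set of objects it reads from.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    groups = []
    while sorter.is_active():
        # Everything returned here has all of its upstream objects deployed.
        ready = sorted(sorter.get_ready())
        groups.append(ready)
        sorter.done(*ready)
    return groups


# Hypothetical lineage: two staging tables feed a view, which feeds a report.
lineage = {
    "stg_orders": set(),
    "stg_customers": set(),
    "v_customer_orders": {"stg_orders", "stg_customers"},
    "rpt_daily_sales": {"v_customer_orders"},
}

groups = deployment_groups(lineage)
for wave, objects in enumerate(groups, start=1):
    print(f"Wave {wave}: {', '.join(objects)}")
```

Objects in the same wave have no dependencies on one another, so in principle they can be migrated and deployed together.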

Migrate

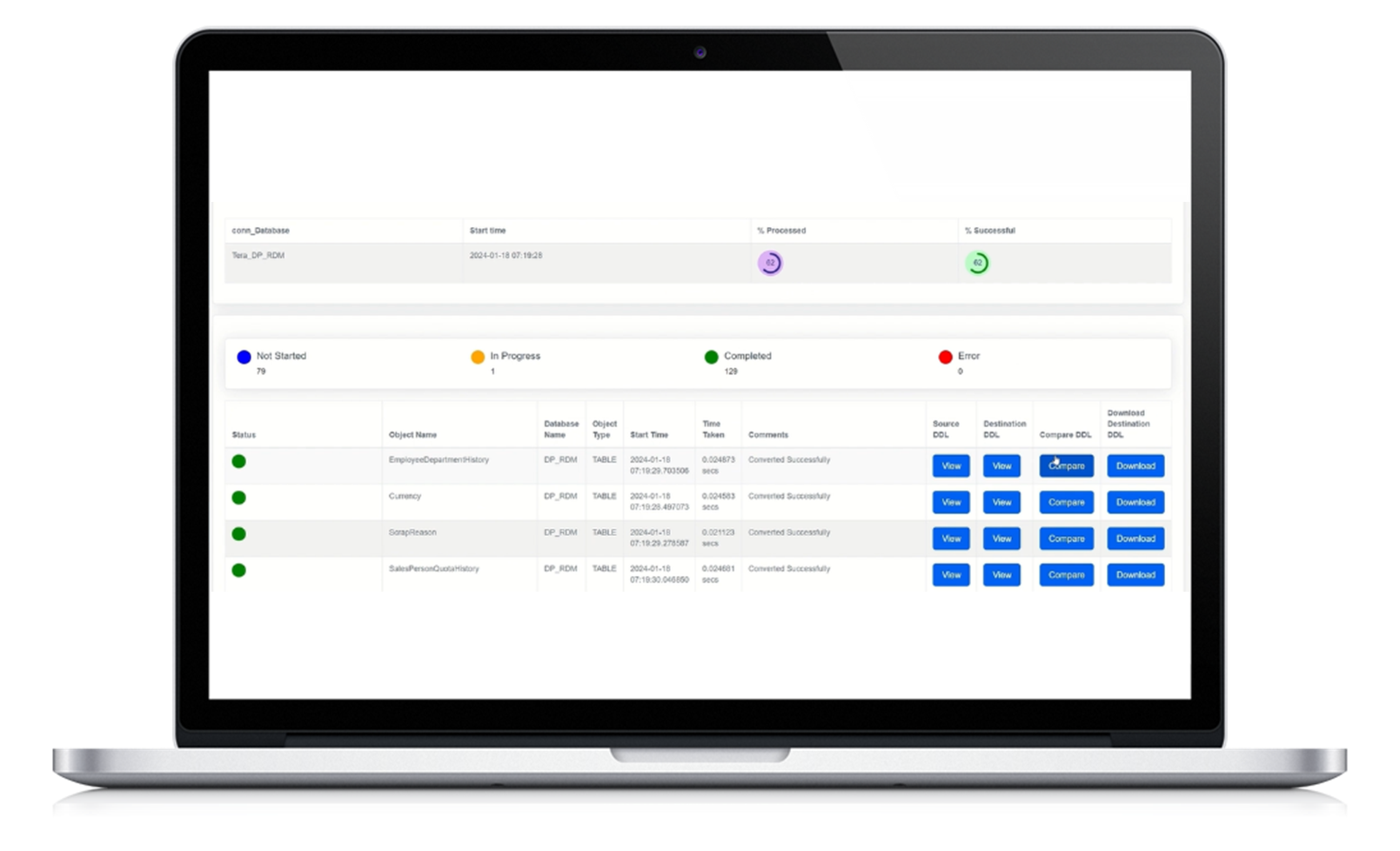

Eureka Migrate carries out the actual migration. It first converts metadata, including tables, procedures, and views, to BigQuery. It then migrates the historical data and, selectively, the incremental data to BigQuery.

- Meta migrator: Converts the source data warehouse object metadata (procedures, tables, and views) to BigQuery SQL format and deploys the objects in BigQuery. It achieves 50-70% accuracy in metadata conversion.

- ETL converter: Automatically converts ETL files from tools such as Informatica into BigQuery SQL and can shift SAS ETLs to PySpark to modernize legacy SAS estates.

- Data migrator: Enables migration of the historical and incremental data to BigQuery and provides the ability to monitor and configure the data migration from within the tool.

Validate

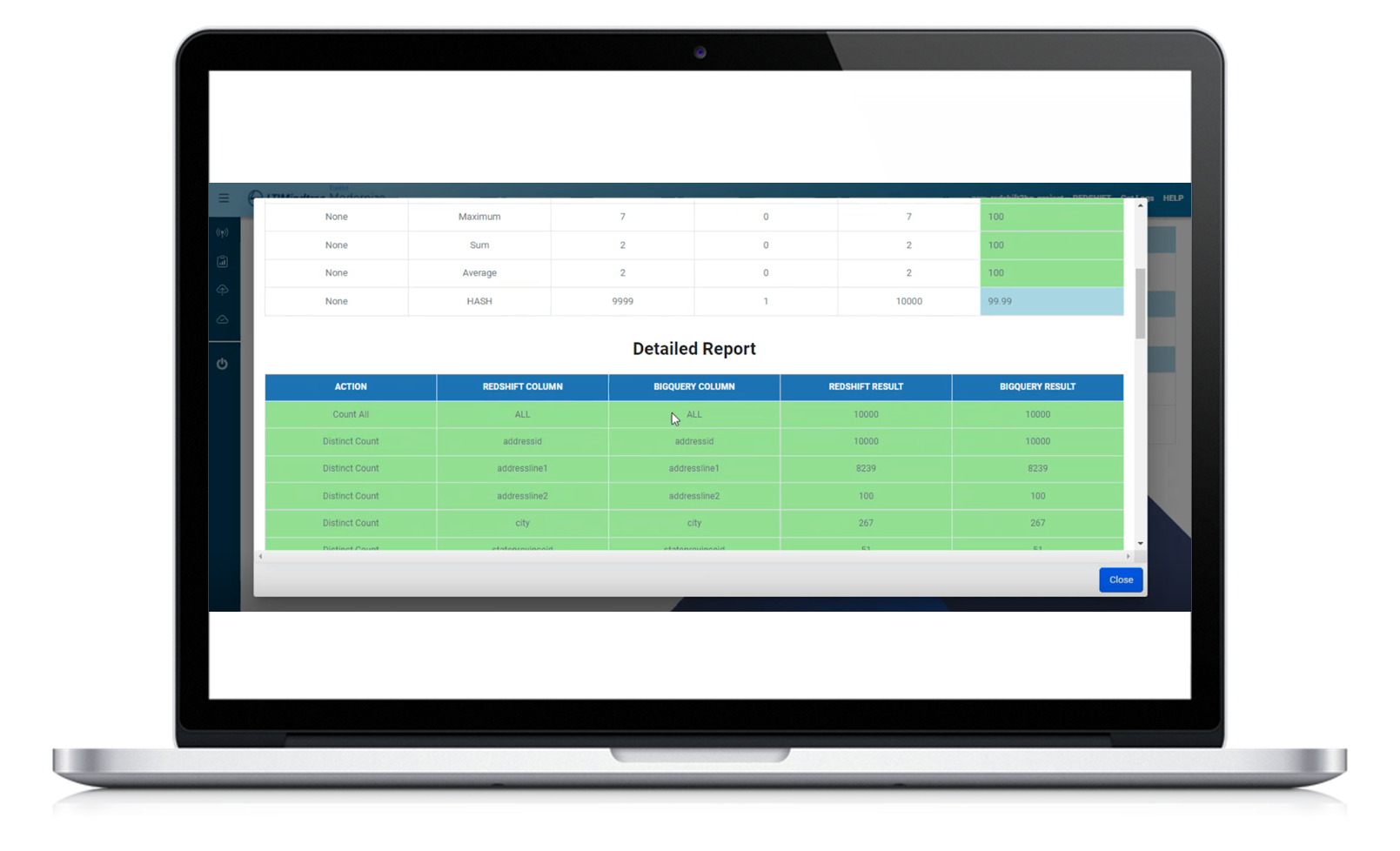

Eureka Validate facilitates high-performance data validation between the source data platform and BigQuery for both historical and incremental data. It also minimizes the compute consumption required for validation.

- Completely automated: Eureka provides an easy-to-use UI to automatically map the source and destination tables and execute validation operations from table level to column and row levels.

- Efficient validation approach: The tool combines a phased validation approach with no movement of actual data to efficiently validate large volumes of data across the source and BigQuery.

- Highly scalable: Eureka leverages the scalability of BigQuery compute to validate the data across platforms. This reduces the need to manually set up and manage high-powered VMs for validation.
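As an illustration of what phased validation without data movement can look like, the Python sketch below has each side summarize its own table into a small profile (a row count plus one digest per column), and only those profiles are compared. This is an assumed approach for illustration, not a description of Eureka's internals. The function names and sample rows are hypothetical, and in-memory lists stand in for the source table and the BigQuery table.

```python
import hashlib

MASK_64 = (1 << 64) - 1


def column_digest(rows, column):
    """Order-independent digest: sum of per-value hashes, modulo 2**64."""
    total = 0
    for row in rows:
        # repr() keeps the sketch simple; a real tool would normalize types first.
        value_hash = hashlib.sha256(repr(row[column]).encode("utf-8")).digest()
        total = (total + int.from_bytes(value_hash[:8], "big")) & MASK_64
    return total


def table_profile(rows, columns):
    """Computed separately on each platform; only this summary is exchanged."""
    return {
        "row_count": len(rows),
        "columns": {name: column_digest(rows, name) for name in columns},
    }


def compare_profiles(source, target):
    """Phase 1 checks row counts; phase 2 checks column digests."""
    if source["row_count"] != target["row_count"]:
        return "row_count_mismatch", []
    mismatched = sorted(
        name
        for name, digest in source["columns"].items()
        if target["columns"].get(name) != digest
    )
    if mismatched:
        return "column_mismatch", mismatched
    return "match", []


columns = ["id", "amount"]
source_rows = [{"id": 1, "amount": 10}, {"id": 2, "amount": 25}]
target_rows = [{"id": 2, "amount": 25}, {"id": 1, "amount": 10}]  # same data, new order
drifted_rows = [{"id": 1, "amount": 10}, {"id": 2, "amount": 52}]  # one value changed

result_ok = compare_profiles(
    table_profile(source_rows, columns), table_profile(target_rows, columns)
)
result_bad = compare_profiles(
    table_profile(source_rows, columns), table_profile(drifted_rows, columns)
)
print(result_ok)
print(result_bad)
```

The cheaper table-level check runs first, and column-level digests are compared only when the row counts agree. A row-level phase could follow the same pattern, exchanging per-row hashes only for tables whose column digests differ.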

Cloud Ingest

Cloud Ingest is a metadata-driven batch-ingestion framework built on Google Cloud Data Fusion and Cloud Composer. It accelerates the addition and management of data sources by 60-70% for batch ingestion into BigQuery.

- Low code: A configuration-driven setup with reusable templates simplifies pipeline creation and management.

- Multiple source types: Supports ingestion from databases, files, and APIs for full as well as incremental loads.

- Audit logging: Creates detailed logs for every ingestion job executed, recording status and record counts for successes and failures.
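The Python sketch below shows the general idea of a configuration-driven setup: each source is described by a small metadata record, and the extraction query and audit entry are derived from it. It does not use or represent the Cloud Data Fusion or Cloud Composer APIs. `SourceConfig`, `build_extract_query`, `audit_record`, and all table and column names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SourceConfig:
    """One metadata entry per source; adding a source means adding a record."""
    name: str
    source_table: str
    target_table: str
    load_type: str  # "full" or "incremental"
    watermark_column: Optional[str] = None


def build_extract_query(cfg, last_watermark=None):
    """Derive the extraction query from metadata instead of hand-writing it."""
    if cfg.load_type not in ("full", "incremental"):
        raise ValueError(f"Unknown load_type: {cfg.load_type}")
    query = f"SELECT * FROM {cfg.source_table}"
    if cfg.load_type == "incremental":
        if cfg.watermark_column is None:
            raise ValueError("Incremental loads need a watermark_column")
        if last_watermark is not None:
            # Illustration only: production code should use query parameters.
            query += f" WHERE {cfg.watermark_column} > '{last_watermark}'"
    return query


def audit_record(cfg, status, records_succeeded, records_failed):
    """Audit entry with job status and record counts for successes and failures."""
    return {
        "source": cfg.name,
        "target_table": cfg.target_table,
        "status": status,
        "records_succeeded": records_succeeded,
        "records_failed": records_failed,
    }


customers = SourceConfig("customers", "crm.customers", "raw.customers", "full")
orders = SourceConfig(
    "orders", "sales.orders", "raw.orders", "incremental", "updated_at"
)

full_query = build_extract_query(customers)
incremental_query = build_extract_query(orders, last_watermark="2024-01-01")
audit = audit_record(orders, "SUCCESS", records_succeeded=98, records_failed=2)
print(full_query)
print(incremental_query)
print(audit)
```

Keeping the per-source differences in configuration is what allows the same template to serve both full and incremental loads.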

FinOps

Eureka FinOps delivers actionable insights to optimize BigQuery spend. It provides end-to-end, granular visibility into costs and embeds best practices so that every dollar is spent wisely.

- Compute insights: Delve into BigQuery compute cost trends, uncover high-cost queries with notable slot usage, runtime, and frequency, and explore user-specific consumption patterns.

- Storage insights: Identify unpartitioned tables and table archival candidates, and get recommendations on switching to a more cost-efficient billing tier. These insights, among others, come from an analysis of BigQuery’s logical and physical storage.

- Deploy rapidly: Eureka FinOps uses Looker Studio dashboards that can be enabled on any BigQuery environment within a few hours to start generating actionable insights.
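As a rough illustration of the compute insights described above, the Python sketch below ranks queries by total slot usage and run frequency. It is an assumed analysis, not Eureka FinOps logic. The field names `user_email`, `query`, and `total_slot_ms` mirror columns of BigQuery's `INFORMATION_SCHEMA.JOBS` view, while the function name and the sample job records are hypothetical.

```python
from collections import defaultdict


def top_queries_by_slot_usage(jobs, limit=3):
    """Aggregate job records per (user, query) and rank by total slot time."""
    totals = defaultdict(lambda: {"runs": 0, "total_slot_ms": 0})
    for job in jobs:
        key = (job["user_email"], job["query"])
        totals[key]["runs"] += 1
        totals[key]["total_slot_ms"] += job["total_slot_ms"]
    ranked = sorted(
        totals.items(), key=lambda item: item[1]["total_slot_ms"], reverse=True
    )
    return [
        {"user_email": user, "query": query, **stats}
        for (user, query), stats in ranked[:limit]
    ]


# Hypothetical job history, shaped like rows exported from INFORMATION_SCHEMA.JOBS.
jobs = [
    {"user_email": "ana@example.com", "query": "SELECT * FROM sales", "total_slot_ms": 90_000},
    {"user_email": "ana@example.com", "query": "SELECT * FROM sales", "total_slot_ms": 85_000},
    {"user_email": "raj@example.com", "query": "SELECT id FROM users", "total_slot_ms": 4_000},
    {"user_email": "raj@example.com", "query": "SELECT * FROM events", "total_slot_ms": 120_000},
]

top = top_queries_by_slot_usage(jobs, limit=2)
for entry in top:
    print(entry)
```

Grouping by user as well as query text surfaces both the expensive statements and who runs them, which matches the user-specific consumption view mentioned above.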

Key Benefits

Automation:

Automate to improve data accuracy.

Migration:

Eliminate complexity and de-risk migration.

Accuracy and Consistency:

Minimize data reconciliation time.

Faster Insights:

Reduce metadata migration from weeks to hours.