Beyond Data: Building Trust for Effective AI and Decision-Making

Trust cannot exist as a one-time project or a neatly contained initiative. It’s the continuously evolving foundation of the intelligent enterprise. It must be engineered into data systems, reinforced by governance, and reflected in every AI-assisted decision. Though invisible, trust anchors everything, turning information into insight and technology into impact.

In this AI-first era, the strength of every decision depends on the trusted data foundation powering it. Enterprises understand that AI can transform how they operate, yet many projects stall before delivering impact. The real barrier is rarely the algorithm; it’s the absence of trust. When data is incomplete, inconsistent, or biased, everything built on it begins to falter. Even the most advanced AI models reflect that uncertainty, producing outcomes that feel slightly off, sometimes dangerously so.

For AI to move from promise to practice, trust must be engineered into every layer of the data lifecycle, from collection through governance and security to decision-making. Building that trust demands accountability, discipline, and continuous evolution, treating data as a living product rather than a static asset.

This blog explores what it takes to build, sustain, and scale trust: why it erodes and how a resilient, trust-first culture can become a defining trait of modern enterprises.

The Data Lifecycle: A Chain of Trust

Let’s decode the chain of trust across the data lifecycle. From initial acquisition to eventual retirement, data passes through multiple stages, and each one is a point where its integrity can be protected or compromised.

- Data Acquisition and Ingestion: This is the gateway where data enters your systems. Whether it’s transactional, streaming, or confidential data, without robust checks and balances, incomplete or inaccurate data can hamper model training, misdirect downstream actions, and quickly pollute the entire ecosystem. A scalable, well-designed data fabric enables seamless ingestion from multiple sources while maintaining strong security controls. By integrating large language models (LLMs) with enterprise data through retrieval-augmented generation (RAG), or by using specialized language models (SLMs), organizations can build expert systems that drive informed decision-making. However, this foundation must be protected from bias and poor governance. Compromised or “hallucinated” data can distort AI outputs, weaken trust, and create downstream risks that undermine the integrity of enterprise intelligence.

- Transformation and Governance: Once acquired, data is transformed, cleaned, and governed. This phase involves applying data management rules, establishing metadata frameworks, and ensuring quality. However, it is also the phase where I’ve seen duplicated data and inconsistent records run rampant. An organization might believe it has a single “golden record” for a customer, only to find multiple conflicting versions scattered across different systems. Training an AI model on such fragmented data is a recipe for failure, because the model’s predictions will rest on an inconsistent and inaccurate view of reality. Robust data governance for AI, spanning lineage, quality controls, and policy enforcement, prevents these inconsistencies from cascading into models and decisions.

- Consumption and Decision-Making: This is where the value of data is realized. From business analysts to AI models, downstream stakeholders consume this data to generate insights and drive decisions. These users inherently trust that the information presented to them is accurate. If a sales dashboard reflects data that is four days old because of complex data pipelines, a CEO loses confidence not just in the report, but in the entire data infrastructure and AI initiative. With dependable, current inputs, teams can trust AI-powered decision-making instead of second-guessing stale dashboards.
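To make the “golden record” problem concrete, here is a minimal Python sketch that groups customer records from several systems on a normalized email address and reports the attributes whose values disagree. The record layout, the source names, and the email-based matching rule are illustrative assumptions; dedicated master data management tools use far richer matching logic.

```python
from collections import defaultdict

# Hypothetical customer records pulled from three source systems.
records = [
    {"source": "crm", "email": "Ana@Example.com", "phone": "555-0101", "city": "Pune"},
    {"source": "billing", "email": "ana@example.com ", "phone": "555-0101", "city": "Mumbai"},
    {"source": "support", "email": "raj@example.com", "phone": "555-0199", "city": "Delhi"},
]


def match_key(record):
    """Illustrative matching rule: trimmed, lower-cased email address."""
    return record["email"].strip().lower()


def find_conflicts(records, fields):
    """Group records by match key and report fields whose values disagree across sources."""
    groups = defaultdict(list)
    for record in records:
        groups[match_key(record)].append(record)

    conflicts = {}
    for key, group in groups.items():
        if len(group) < 2:
            continue  # a single record has nothing to conflict with
        for field in fields:
            if len({r[field] for r in group}) > 1:
                conflicts.setdefault(key, {})[field] = {r["source"]: r[field] for r in group}
    return conflicts


conflicts = find_conflicts(records, ["phone", "city"])
print(conflicts)
# {'ana@example.com': {'city': {'crm': 'Pune', 'billing': 'Mumbai'}}}
```

The conflicting `city` value is exactly the kind of discrepancy a data steward would need to resolve before the record can be trusted as the golden version.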

Erosion of trust at any of these stages creates a cumulative effect. Poor data quality leads to flawed model training, which in turn produces unreliable AI-driven predictions. This cycle of neglect undermines the entire value proposition of AI and can lead to significant business risks.

AI Across Three Dimensions

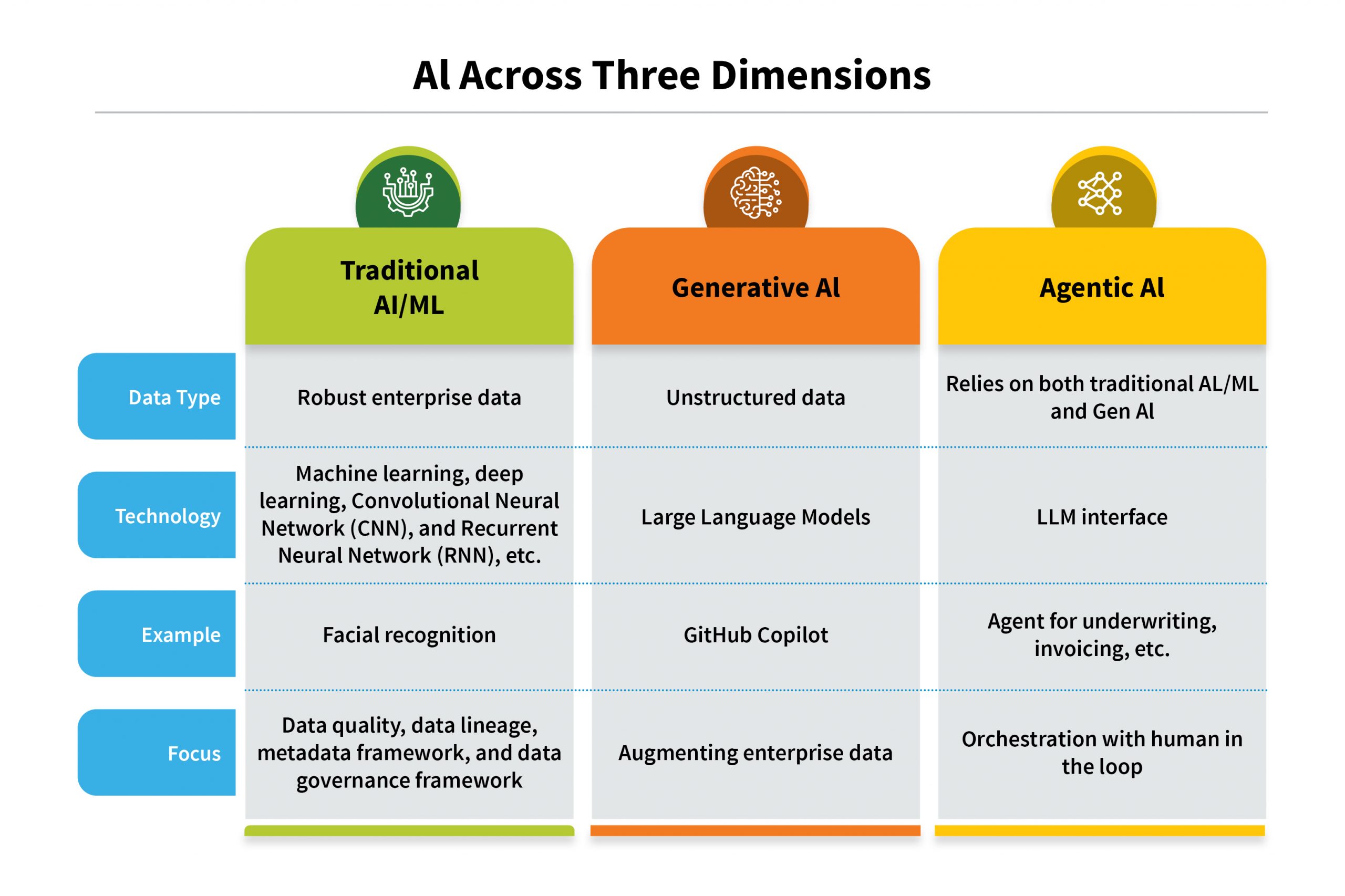

Across all three dimensions of AI, trust in the data lifecycle is the common thread that determines success. Whether it’s ensuring the accuracy of training data for traditional AI, preventing hallucinations in generative AI, or enabling ethical decision-making in agentic AI, trust must be engineered into every stage of the lifecycle. Without it, AI’s potential to transform enterprises is compromised, leading to unreliable outcomes and eroded confidence. Here’s a quick overview of the data considerations in each dimension: traditional AI/ML, generative AI, and agentic AI.

The first dimension, traditional AI/ML, requires all your enterprise data to be robust. Data quality controls, data lineage, a metadata framework, and a data governance framework should be in place for conventional data, governing all enterprise data.

The second dimension, generative AI, brings in unstructured and streaming data. Consider enterprise emails, SharePoint content, or contact center call recordings. All of these are unstructured data that augment the existing enterprise data, so they need more sophisticated governance mechanisms.

Agents constitute the third dimension. So, how do you govern agent behavior? A robust agent governance framework is essential for monitoring and analyzing how agents actually behave.

Tooling must be in place for each of these areas from the start, because things can go wrong anywhere from acquisition to distribution, even in your APIs. This is an area that will keep evolving.
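One common starting point for agent governance is to route every tool call through a policy layer that checks an allowlist and writes an audit entry. The sketch below assumes a hypothetical `AgentGovernor` class and made-up tool functions; it is not tied to any particular agent framework.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AgentGovernor:
    """Hypothetical policy layer between agents and the tools they call."""
    allowed_tools: dict  # agent name -> set of permitted tool names
    audit_log: list = field(default_factory=list)

    def invoke(self, agent, tool_name, tool_fn, *args):
        allowed = tool_name in self.allowed_tools.get(agent, set())
        # Record every attempt, permitted or not, so behavior can be reviewed later.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "tool": tool_name,
            "args": args,
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"{agent} is not permitted to call {tool_name}")
        return tool_fn(*args)


# Made-up tools for illustration.
def lookup_order(order_id):
    return {"order_id": order_id, "status": "shipped"}


def issue_refund(order_id):
    return f"refunded {order_id}"


gov = AgentGovernor(allowed_tools={"support-agent": {"lookup_order"}})
print(gov.invoke("support-agent", "lookup_order", lookup_order, "A-17"))
try:
    gov.invoke("support-agent", "issue_refund", issue_refund, "A-17")
except PermissionError as err:
    print(err)  # support-agent is not permitted to call issue_refund
print([(e["tool"], e["allowed"]) for e in gov.audit_log])
# [('lookup_order', True), ('issue_refund', False)]
```

Logging denied attempts alongside permitted ones is the design choice that matters here: the audit trail shows not only what an agent did, but what it tried to do.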

The Pillars of a Trustworthy Data Foundation

To counteract the risks of trust erosion, enterprises must build their data strategy on four fundamental pillars. These principles provide a framework for measuring and enforcing trust across the organization.

- Dependability

Dependability is the assurance that data is accurate, complete, unique, and timely. It answers the question: Can we rely on this data? Achieving dependability requires enforcing the six dimensions of data quality (completeness, uniqueness, timeliness, validity, accuracy, and consistency) at the point of ingestion and throughout the lifecycle.
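Several of these dimensions can be scored directly from the data. The sketch below computes completeness, uniqueness, validity, and timeliness as simple ratios over a batch of records; the field names, the simplistic email rule, and the two-day freshness threshold are assumptions for illustration. Accuracy and consistency usually need a trusted reference source or a cross-system comparison, so they are left out here.

```python
import re
from datetime import datetime, timedelta, timezone

# Deliberately simple validity rule; real email validation is more involved.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def quality_scores(rows, key, max_age, now):
    """Score a batch of records on four data quality dimensions (each 0.0 to 1.0)."""
    total = len(rows)
    complete = sum(1 for r in rows if all(v not in (None, "") for v in r.values()))
    distinct_keys = len({r[key] for r in rows})
    valid = sum(1 for r in rows if r.get("email") and EMAIL_RE.match(r["email"]))
    fresh = sum(1 for r in rows if now - r["updated_at"] <= max_age)
    return {
        "completeness": complete / total,     # rows with no missing values
        "uniqueness": distinct_keys / total,  # distinct keys per row
        "validity": valid / total,            # rows whose email matches the rule
        "timeliness": fresh / total,          # rows updated within max_age
    }


utc = timezone.utc
rows = [
    {"id": 1, "email": "ana@example.com", "updated_at": datetime(2024, 1, 9, tzinfo=utc)},
    {"id": 2, "email": "not-an-email", "updated_at": datetime(2024, 1, 9, tzinfo=utc)},
    {"id": 2, "email": "raj@example.com", "updated_at": datetime(2024, 1, 2, tzinfo=utc)},
    {"id": 3, "email": None, "updated_at": datetime(2024, 1, 9, tzinfo=utc)},
]
scores = quality_scores(rows, key="id", max_age=timedelta(days=2),
                        now=datetime(2024, 1, 10, tzinfo=utc))
print(scores)
# {'completeness': 0.75, 'uniqueness': 0.75, 'validity': 0.5, 'timeliness': 0.75}
```

Running checks like these at ingestion, and again after each transformation, is one way to turn the dependability pillar into numbers that can be tracked and alerted on.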

- Transparency

Transparency provides a clear understanding of the data’s origin, journey, and intended use. Data lineage capabilities are essential for tracing data back to its source, illuminating every transformation it has undergone. This is particularly crucial for AI explainability. When an AI model recommends a specific action, such as hiring one candidate over another, transparency allows you to understand the data points that influenced that recommendation, building confidence in the outcome.
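At its simplest, lineage is a graph of datasets and the transformations that connect them. The following sketch, built on a hypothetical hiring-model pipeline, walks that graph from a model output back to every raw source. The dataset and transformation names are invented, and the graph is assumed to be acyclic.

```python
# Hypothetical lineage graph: dataset -> list of (upstream dataset, transformation).
# Assumed to be acyclic; datasets with no upstream entries are raw sources.
LINEAGE = {
    "candidate_score": [("candidate_features", "model inference")],
    "candidate_features": [
        ("hr_applications", "feature engineering"),
        ("assessment_results", "join on candidate_id"),
    ],
    "hr_applications": [("ats_export", "clean and deduplicate")],
    "assessment_results": [],
    "ats_export": [],
}


def trace_to_sources(dataset, graph, path=()):
    """Yield each path from `dataset` back to a raw source, interleaving transformations."""
    upstream = graph.get(dataset, [])
    if not upstream:
        yield path + (dataset,)
        return
    for parent, transform in upstream:
        yield from trace_to_sources(parent, graph, path + (dataset, f"[{transform}]"))


for p in trace_to_sources("candidate_score", LINEAGE):
    print(" <- ".join(p))
```

Each printed line is one route from the model’s score back to a source system, which is the trail an explainability review would follow when asking why a candidate was recommended.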

- Accountability

Accountability establishes clear ownership for data assets. Who is responsible for this data? By defining data custodians and stewards, organizations ensure that someone is accountable for maintaining data integrity, quality, and proper formatting. This pillar shifts data management from a passive activity to an active, owned responsibility.

- Accessibility

Accessibility ensures that the right stakeholders can access the right data at the right time, under the right controls. The goal is to strike a balance between robust security and practical usability. Auditors, regulators, and business leaders all need access to reliable data to perform their functions. A well-designed data fabric can provide this secure, accessible environment, democratizing data while maintaining necessary controls.
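A minimal sketch of “right data, right controls”: a role-based policy that reveals only permitted columns and masks the rest. The roles, columns, and masking token are hypothetical; in practice this kind of control is typically enforced by the data platform itself rather than in application code.

```python
# Hypothetical policy: columns each role may see in clear text.
POLICY = {
    "auditor": {"customer_id", "amount", "account_number"},
    "analyst": {"customer_id", "amount"},
}
MASK = "***"


def read_row(row, role):
    """Return a copy of the row with columns outside the role's policy masked.

    Roles without a policy are denied outright rather than given a default.
    """
    if role not in POLICY:
        raise PermissionError(f"role {role!r} has no access policy")
    allowed = POLICY[role]
    return {col: (val if col in allowed else MASK) for col, val in row.items()}


row = {"customer_id": "C-42", "amount": 120.5, "account_number": "9876543210"}
print(read_row(row, "analyst"))  # {'customer_id': 'C-42', 'amount': 120.5, 'account_number': '***'}
print(read_row(row, "auditor"))  # all columns visible
```

Denying unknown roles by default keeps the control on the secure side, while auditors still see the full record they need.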

Balancing Trust for Diverse Stakeholders

Different stakeholders have different expectations for data trust, and a well-thought-out strategy must balance these often-competing needs.

Successfully navigating these expectations requires a holistic governance framework that addresses all four pillars of trust. It is not enough to secure data if it is not accessible, nor is it valuable to make data accessible if it is not dependable.

Evolution of Trust and Explainability

About seven years ago, long before the GenAI boom, a leading FMCG client faced a classic last-mile challenge: deciding which SKUs to stock across thousands of neighborhood stores. With each store carrying up to 100K SKUs and no digital point-of-sale data, sales reps relied on instinct, which often led to inefficiencies.

LTIMindtree built a deep learning model that analyzed 160 million data points covering consumer behavior and ZIP-level demand patterns to recommend optimal assortments and identify new cross-sell and upsell opportunities. The results, validated through A/B testing across markets, confirmed a measurable sales lift and improved data-driven decision-making. That trust was built on iterative calibration, not algorithmic transparency.
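For readers curious about the mechanics, A/B validation typically compares a performance metric between a control group and a treatment group. The sketch below computes the relative sales lift and a Welch’s t-statistic; the weekly sales figures are made-up sample values, not results from the engagement described above.

```python
from math import sqrt
from statistics import mean, variance

# Made-up weekly sales per store, for illustration only.
control = [100, 104, 98, 102, 96]      # stores using existing assortments
treatment = [108, 112, 106, 110, 104]  # stores using model-recommended assortments

lift = (mean(treatment) - mean(control)) / mean(control)

# Welch's t-statistic: difference in means over the combined standard error,
# without assuming the two groups share a variance.
standard_error = sqrt(variance(control) / len(control) + variance(treatment) / len(treatment))
t_stat = (mean(treatment) - mean(control)) / standard_error

print(f"lift = {lift:.1%}, t = {t_stat:.2f}")  # lift = 8.0%, t = 4.00
```

In practice, the t-statistic would be compared against a t-distribution critical value, or converted to a p-value, before the lift is treated as real rather than noise.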

Today, in the LLM era, explainability has become far more complex. As AI systems become more capable yet more opaque, the definition of trust must evolve from human validation to responsible, transparent machine reasoning.

The Path Forward: Cultivating a Trust-First Culture

Building trust in data isn’t a project; it’s a persistent act of responsibility woven into an organization’s daily fabric. For today’s CTOs, CIOs, and CDOs, the mandate is clear: nurture a culture where data integrity is foundational, not aspirational.

That shift starts with mindset. Data can no longer be treated as the residue of operations; it is the raw input of intelligence and innovation. Trust grows when governance operates quietly yet effectively, through real-time quality checks, lineage tracking across AI models, and unified data architectures (such as data fabrics) that bridge silos while upholding accuracy, security, and access.

In today’s digital economy, trust is the invisible currency of success. Embedding principles like dependability, transparency, accountability, and accessibility into every layer of the data ecosystem transforms AI from experimentation into a sustainable, transformative enterprise advantage.
