Unlock Cross-Platform Insights: How GCP Dataplex Bridges with Databricks Unity Catalog

In today’s data-driven world, organizations often leverage best-of-breed tools across multiple cloud platforms. While this offers flexibility and power, it can lead to a significant challenge: metadata fragmentation. Data assets, definitions, lineage, and governance policies become scattered, creating silos that hinder discovery, trust, and efficient data utilization.

A successful architectural pattern is to build a centralized metadata management platform by integrating metadata from sources like Databricks Unity Catalog (UC) into a platform built on Google Cloud’s Dataplex. In this post, we cover the problem in detail, the solution approach, the implementation process, and the benefits of this approach.

The Problem: A fragmented data landscape

Imagine a data ecosystem that relies heavily on both Databricks for data lake workloads (often running on AWS or Azure) and Google Cloud Platform (GCP) for various advanced analytics and data science needs. While Databricks Unity Catalog provides excellent metadata management within the Databricks environment, any team working primarily in GCP lacks a single, comprehensive view of all data assets.

This leads to several pain points:

- Poor data discovery: Analysts and data scientists struggle to find relevant Databricks datasets from within their GCP environment, often relying on tribal knowledge or manual searches.

- Lack of trust and consistency: Without a unified view, understanding data lineage, ownership, and definitions across platforms is difficult, leading to potential misinterpretations and duplicated effort.

- Governance gaps: Applying consistent governance policies, access controls, and data quality rules across both Databricks and GCP assets is complex and manual.

- Operational inefficiency: Data engineers spend their valuable time reconciling metadata or building bespoke, point-to-point integrations that are difficult to maintain.

- Siloed views: Different teams have incomplete pictures of the overall data landscape, hindering cross-functional collaboration and holistic analysis.

Analysts, data scientists, data engineers, and data stewards all need a way to break down these silos and work from a single source of truth for metadata across the key platforms.

A unified metadata platform on GCP Dataplex

The goal is to create a central metadata catalog that reflects assets from both the native GCP services and the Databricks environment. For our solution approach, we decided to use GCP Dataplex as the foundation for our unified metadata platform.

Why Dataplex?

- GCP native integration: Seamlessly catalogs native GCP resources (BigQuery, GCS, etc.).

- Centralized governance: Provides tools for data quality, security policies, and monitoring in one place.

- Scalability and extensibility: Built on GCP’s robust infrastructure, completely serverless, capable of handling growing metadata volumes and extensible via APIs.

- Simplified discovery: Supports data discovery and metadata management through the Dataplex catalog.

The core idea is to ingest and synchronize critical metadata from Databricks Unity Catalog into GCP Dataplex’s catalog. This does not replace Unity Catalog (which remains the authoritative source for Databricks metadata) but provides a unified view within the GCP ecosystem.

Implementation: Connecting Unity Catalog to Dataplex

We explored pre-built connectors and tools for ingesting Databricks Unity Catalog metadata into GCP Dataplex, but found no out-of-the-box solution. Therefore, we developed a custom connector.

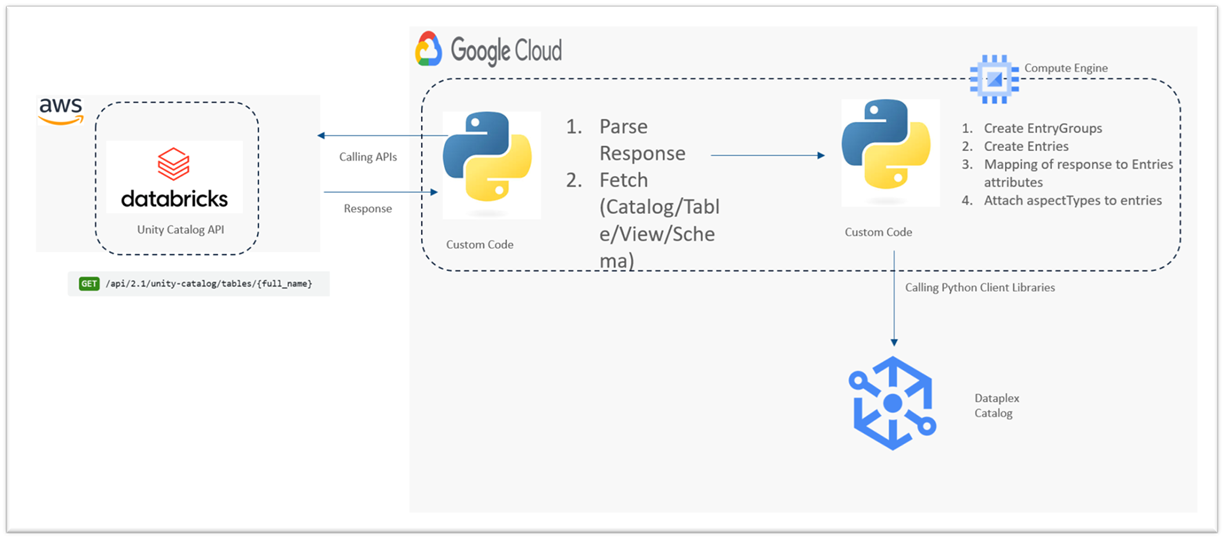

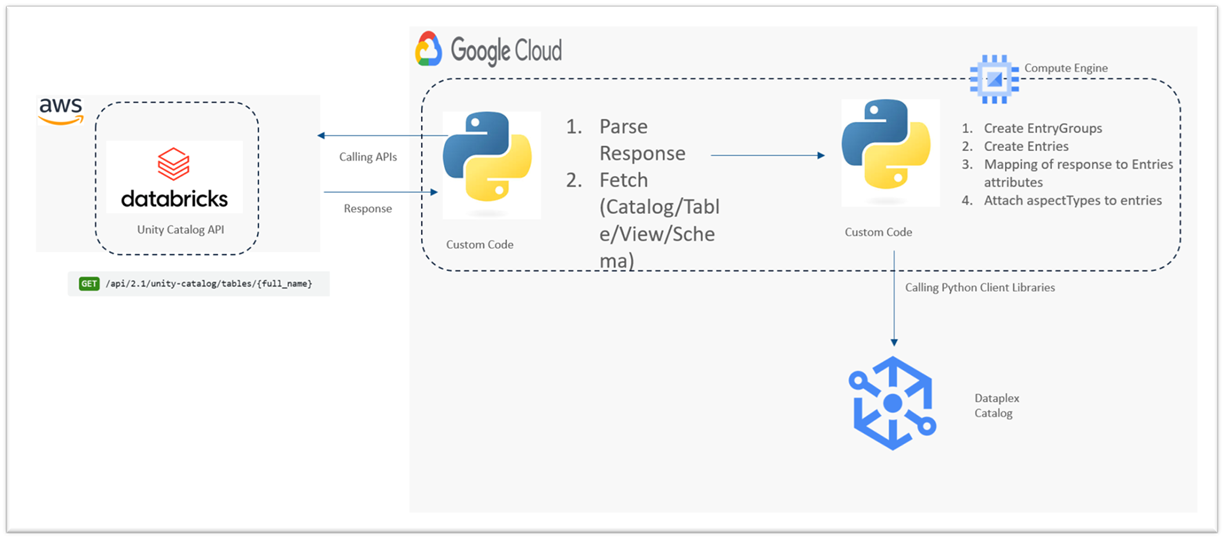


Figure 1: High-level reference architecture

Implementation steps

Here’s a high-level overview of the architecture for the solution approach and implementation steps.

Figure 2: Solution approach

- Metadata extraction from Unity Catalog

- We used the Databricks Unity Catalog REST APIs (the Databricks CLI is a possible alternative) to programmatically query and extract the necessary metadata.

- Key metadata elements included: catalogs, schemas (databases), tables, views, and columns (including data types and comments/descriptions).

- We developed Python scripts to handle this extraction. They first list the catalogs, then the schemas within each catalog, and finally the tables and views (with their columns) within each schema.

Figure 3: Unity Catalog get table details API reference
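The catalog-to-schema-to-table walk described above can be sketched roughly as follows. This is a minimal illustration, not the connector's actual code: it assumes the Unity Catalog list endpoints under `/api/2.1/unity-catalog`, a personal access token for authentication, and token-based pagination. The helper names (`make_fetcher`, `list_all`, `extract_tables`) are hypothetical.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable, Dict, Iterator, List, Optional

# A fetcher takes an API path plus query parameters and returns the decoded JSON body.
Fetcher = Callable[[str, Dict[str, str]], dict]

API_ROOT = "/api/2.1/unity-catalog"  # assumed API version


def make_fetcher(host: str, token: str) -> Fetcher:
    """Build a fetcher that calls the Databricks REST API with a bearer token."""
    def fetch(path: str, params: Dict[str, str]) -> dict:
        query = urllib.parse.urlencode(params)
        url = f"{host}{path}" + (f"?{query}" if query else "")
        request = urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
        with urllib.request.urlopen(request, timeout=30) as response:
            return json.load(response)
    return fetch


def list_all(fetch: Fetcher, path: str, key: str, params: Dict[str, str]) -> Iterator[dict]:
    """Yield every item under `key`, following next_page_token until it is absent."""
    page_token: Optional[str] = None
    while True:
        query = dict(params)
        if page_token:
            query["page_token"] = page_token
        body = fetch(path, query)
        yield from body.get(key, [])
        page_token = body.get("next_page_token")
        if not page_token:
            break


def extract_tables(fetch: Fetcher) -> List[dict]:
    """Walk catalogs, then schemas, then tables and views; return one record per table."""
    records = []
    for catalog in list_all(fetch, f"{API_ROOT}/catalogs", "catalogs", {}):
        schema_params = {"catalog_name": catalog["name"]}
        for schema in list_all(fetch, f"{API_ROOT}/schemas", "schemas", schema_params):
            table_params = {"catalog_name": catalog["name"], "schema_name": schema["name"]}
            for table in list_all(fetch, f"{API_ROOT}/tables", "tables", table_params):
                records.append({
                    "full_name": table["full_name"],
                    "table_type": table.get("table_type"),
                    "comment": table.get("comment"),
                    "columns": [
                        {
                            "name": col["name"],
                            "type_name": col.get("type_name"),
                            "type_text": col.get("type_text"),
                            "comment": col.get("comment"),
                            "nullable": col.get("nullable", True),
                        }
                        for col in table.get("columns", [])
                    ],
                })
    return records
```

A run against a real workspace would look like `extract_tables(make_fetcher("https://<workspace-host>", token))`. Passing the fetcher in as a parameter keeps the traversal logic testable without network access.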

- Metadata transformation

- The metadata returned by the Unity Catalog API needed to be mapped and transformed into the structure required by the Dataplex catalog API.

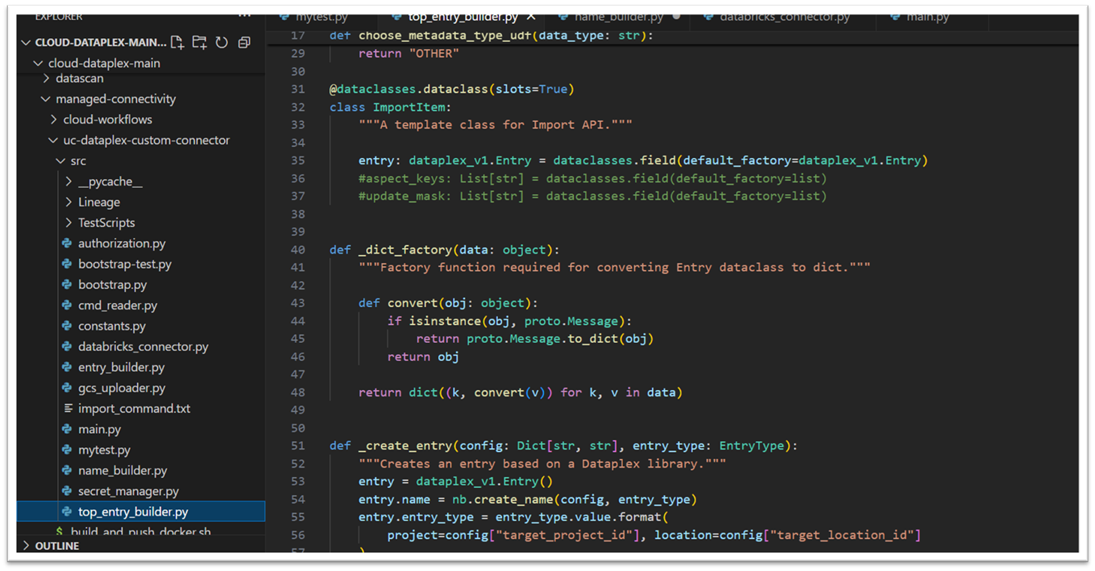

- This involved translating concepts like UC catalogs, schemas, tables, views, and columns into appropriate Dataplex catalog entry groups and entries, mapping data types, and structuring descriptions and other attributes correctly. We used Python scripts for the transformation.

Figure 4: Code snippet for custom connector
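To make the mapping concrete, here is a hedged sketch of how one extracted table record might be turned into a Dataplex catalog entry payload. Several things here are assumptions rather than details from our connector: the entry type ID `databricks-table` is hypothetical, the column information is attached through the system schema aspect type (`dataplex-types.global.schema`), and the `metadataType` category names should be checked against that aspect type's definition before use.

```python
from typing import Dict

ENTRY_TYPE_ID = "databricks-table"  # hypothetical custom entry type ID
SCHEMA_ASPECT_KEY = "dataplex-types.global.schema"
SCHEMA_ASPECT_TYPE = "projects/dataplex-types/locations/global/aspectTypes/schema"

# Unity Catalog type_name -> coarse Dataplex type category (assumed names).
UC_TO_METADATA_TYPE: Dict[str, str] = {
    "BOOLEAN": "BOOLEAN",
    "BYTE": "NUMBER", "SHORT": "NUMBER", "INT": "NUMBER", "LONG": "NUMBER",
    "FLOAT": "NUMBER", "DOUBLE": "NUMBER", "DECIMAL": "NUMBER",
    "STRING": "STRING", "CHAR": "STRING",
    "DATE": "DATETIME",
    "TIMESTAMP": "TIMESTAMP",
}


def to_dataplex_entry(table: dict, project: str, location: str, entry_group: str) -> dict:
    """Map one extracted UC table or view record to a Dataplex entry payload."""
    parent = f"projects/{project}/locations/{location}"
    fields = [
        {
            "name": col["name"],
            "dataType": col.get("type_text") or "",
            # Anything not in the mapping falls back to OTHER.
            "metadataType": UC_TO_METADATA_TYPE.get(col.get("type_name"), "OTHER"),
            "mode": "NULLABLE" if col.get("nullable", True) else "REQUIRED",
            "description": col.get("comment") or "",
        }
        for col in table.get("columns", [])
    ]
    return {
        # Using the UC full name as the entry ID is an assumption of this sketch.
        "name": f"{parent}/entryGroups/{entry_group}/entries/{table['full_name']}",
        "entry_type": f"{parent}/entryTypes/{ENTRY_TYPE_ID}",
        "entry_source": {
            "system": "databricks",
            "resource": table["full_name"],
            "display_name": table["full_name"].split(".")[-1],
            "description": table.get("comment") or "",
        },
        "aspects": {
            SCHEMA_ASPECT_KEY: {
                "aspect_type": SCHEMA_ASPECT_TYPE,
                "data": {"fields": fields},
            }
        },
    }
```

Keeping the original `type_text` in `dataType` preserves the exact Databricks type (for example `decimal(10,2)`), while `metadataType` gives Dataplex a coarse category it can filter on.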

- Metadata ingestion into Dataplex

- We used the GCP Dataplex catalog APIs and client libraries to push the transformed metadata into Dataplex catalog.

- We created a dedicated entry group within Dataplex catalog to logically separate the synchronized Databricks assets.

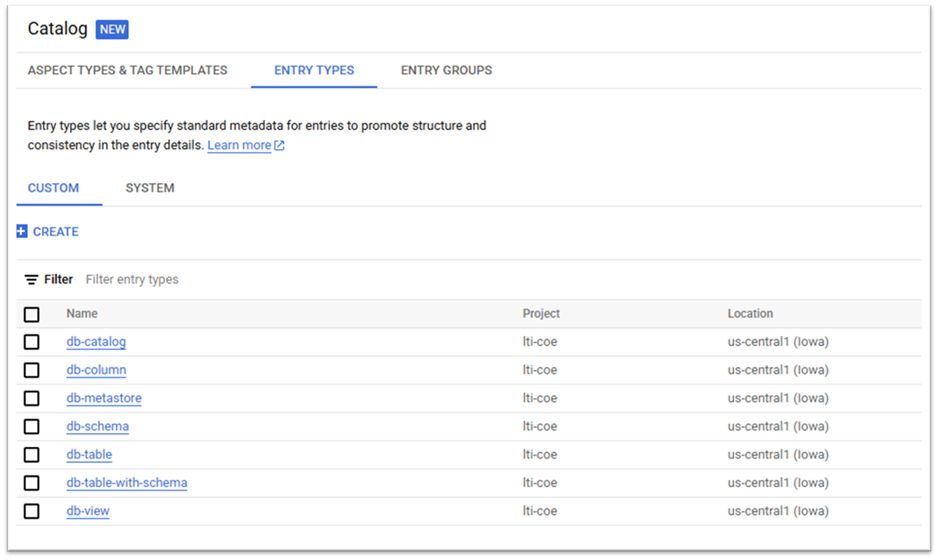

- We created custom entry types to categorize the different data assets and attached attributes to those assets as aspects, whose structure is defined by aspect types.

Figure 5: Dataplex catalog entry types
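The ingestion step might look roughly like the sketch below. It assumes a `CatalogServiceClient` from the `google-cloud-dataplex` Python library is passed in as `client`, that its `create_entry_group` and `create_entry` methods accept a dict-shaped request, and that the entry payloads carry their full resource name in a `name` key. Depending on the library version, aspect data may need to be wrapped in a protobuf `Struct` rather than passed as a plain dict. The function names are hypothetical.

```python
from typing import Iterable, List, Tuple


def ensure_entry_group(client, project: str, location: str, entry_group_id: str,
                       description: str = ""):
    """Create the dedicated entry group and wait for the long-running operation."""
    operation = client.create_entry_group(request={
        "parent": f"projects/{project}/locations/{location}",
        "entry_group_id": entry_group_id,
        "entry_group": {"description": description},
    })
    return operation.result()


def push_entries(client, entries: Iterable[dict]) -> Tuple[List[str], List[Tuple[str, str]]]:
    """Create each entry; collect failures instead of aborting the whole sync."""
    created: List[str] = []
    failed: List[Tuple[str, str]] = []
    for entry in entries:
        # Split ".../entryGroups/<group>/entries/<id>" into parent and entry ID.
        parent, _, entry_id = entry["name"].partition("/entries/")
        body = {key: value for key, value in entry.items() if key != "name"}
        try:
            client.create_entry(request={"parent": parent, "entry_id": entry_id, "entry": body})
            created.append(entry_id)
        except Exception as exc:  # a fuller version would handle "already exists" with an update
            failed.append((entry_id, str(exc)))
    return created, failed
```

With the real library, the client would be built with `dataplex_v1.CatalogServiceClient()` after `from google.cloud import dataplex_v1`. Returning the failures rather than raising lets a scheduled sync report partial success.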

Here is an example of the metadata exported from Databricks UC to GCP Dataplex.

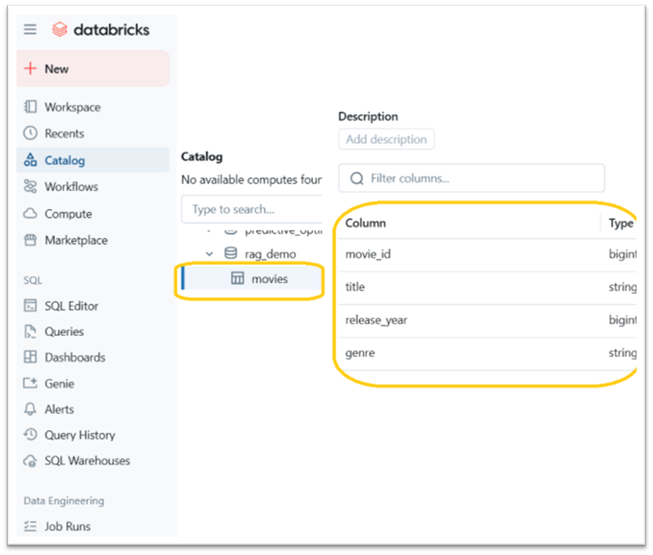

- Metadata in Databricks

Figure 6: Metadata in Databricks environment

- Metadata in GCP Dataplex

Figure 7: Metadata in GCP Dataplex catalog

Logging and monitoring were implemented using Cloud Logging and Cloud Monitoring to track pipeline health and troubleshoot errors.
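As one possible shape for this, the sketch below writes one JSON line per pipeline step. It assumes the connector runs on a GCP runtime whose standard output is collected by Cloud Logging (Cloud Run, for example), where JSON lines carrying `severity` and `message` keys are parsed as structured logs. The `log_event` and `run_step` helpers are hypothetical names.

```python
import json
import sys
import time
from typing import Callable, TextIO


def log_event(severity: str, message: str, stream: TextIO = sys.stdout, **fields) -> None:
    """Write one JSON log line with a severity, a message, and extra fields."""
    stream.write(json.dumps({"severity": severity, "message": message, **fields}) + "\n")


def run_step(name: str, step: Callable[[], int], stream: TextIO = sys.stdout) -> bool:
    """Run one pipeline step that returns an item count; log its outcome and duration."""
    started = time.monotonic()
    try:
        count = step()
    except Exception as exc:
        log_event("ERROR", f"{name} failed", stream, step=name, error=str(exc))
        return False
    seconds = round(time.monotonic() - started, 3)
    log_event("INFO", f"{name} finished", stream, step=name, items=count, seconds=seconds)
    return True
```

Fields such as `step` and `items` can then back log-based metrics and alerts in Cloud Monitoring.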

Benefits: Unlocking data value

This approach for a unified metadata platform delivers significant value across the organization:

- Improved data discovery: GCP users can search and discover relevant Databricks tables directly within the familiar Dataplex or Data Catalog interface, significantly reducing search time.

- Enhanced data trust: A single view of metadata, including descriptions and lineage (where synced), fosters greater understanding and trust in data assets, regardless of their origin.

- Streamlined governance: Consistent data governance policies, tags (e.g., PII identification), and access controls can be viewed and potentially managed more holistically through Dataplex across both GCP and Databricks assets.

- Increased productivity: Data analysts, scientists, and engineers spend less time searching for data and understanding its context, freeing them up for higher-value analysis and development tasks.

- Holistic data landscape view: Provides leadership and data stewards with a comprehensive overview of data assets across key platforms, enabling better strategic decision-making.

- Foundation for future integration: Creates a central hub that can be extended to incorporate metadata from other sources in the future.

Conclusion

In today’s complex data landscape, the move to multi-cloud and best-of-breed platforms is creating a significant side effect: deep metadata silos. To truly harness the power of their data, enterprises can no longer afford to have critical data isolated within individual systems like Databricks, Snowflake, or Redshift.

Breaking down these silos is now a strategic necessity. A distinct trend is emerging where organizations are architecting a central, interoperable metadata plane. This involves unifying specialized catalogs with broader platforms to create a comprehensive and trustworthy view of all data assets. This unified approach is the foundation for effective governance, democratized data access, and the agility required to win in a data-driven economy.

References:

- About data catalog management in Dataplex Universal Catalog, Google Cloud documentation, https://cloud.google.com/dataplex/docs/catalog-overview

- What is Unity Catalog?, Databricks documentation, https://docs.databricks.com/aws/en/data-governance/unity-catalog/
